Saturday, April 26, 2008

Going Viral

Thursday, April 17, 2008

Oops, Vogue is racist

Saturday, April 12, 2008

Lecture of a Lifetime

This man used to stalk me.

Everywhere I went, he went.

Normally I would have been extremely disturbed by such behavior; however, in this particular case I postponed alerting the authorities due to the unique circumstances of the situation.

My first encounter with Randy Pausch occurred a few months ago as I was watching CNN recap their list of people to remember from 2007. Amongst the list of innovators, heroes, and activists highlighted was Pausch. I briefly watched the segment and gathered the basics of his story-he was a professor at Carnegie Mellon University who had given a "last lecture" in September that became an international sensation when it was broadcast online-before I changed the channel and proceeded to watch a rerun of Gossip Girl.

My second run-in with Pausch happened a little over a week ago when I was checking my email. As I looked through my messages, one from Borders Books entitled "This Year's Most Inspiring Book" caught my eye. Curious as to what this well of inspiration was, I opened the message expecting to see yet another title recommended by Oprah's book club. Surprisingly enough, I was not met with Middlesex or The Measure of A Man but The Last Lecture, a book written by, you guessed it, Randy Pausch.

My third, and final, encounter with Pausch came last Sunday at brunch. As I settled down at the dining room table to enjoy my eggs and fruit, I picked up the LA Times and proceeded to sift through the newspaper, picking out my favorite sections. When I plucked out the Parade I once again came face to face with Randy Pausch, whose face was plastered on the cover.

By this time I had just about had it, so I decided to read the enclosed article to find out exactly what made this man so special and why he and his story had infiltrated every corner of my life.

Last year 47-year-old Randy Pausch agreed to give a "last lecture" at Carnegie Mellon University, the institution at which he was a professor of computer science. The "last lecture" was not literally to be his last lecture, but rather a talk in which he was to think about what matters most to him and what wisdom he would share with the world if he knew it was his last chance to do so. Last lectures are given at many colleges and universities around the world; however, Pausch's was unique in that a few weeks after being asked to talk, he learned that he was terminally ill with pancreatic cancer and had only a few months to live. With this discovery, the nature of his last lecture changed, as it truly became his final goodbye to his students. Rather than throw himself a pity party and cancel his commitment, this married father of three delivered his talk "How to Live Your Childhood Dreams" last September, in which he discussed how he achieved his childhood dreams and provided realistic advice on how others can live their lives so that they can make their childhood dreams come true, too.

Although Pausch's lecture and book are full of heartening tales and advice, I picked out three of my favorite lessons of his to share:

1. Dare to Take A Risk

In a virtual-reality course that Pausch taught he encouraged students to attempt hard things without worrying about the risk of failure. At the end of the semester, Pausch presented "The First Penguin Award", a stuffed penguin, to the group that had taken the biggest gamble while not meeting their goals. He derived this award from the idea that when penguins jump in waters that are potentially full of predators, one of them must take the risk and be the first to jump in. Pausch contends that "experience is what you get when you don't get what you wanted" and is one of the most valuable things anyone has to offer.

2. Look for the Best in Everybody

Pausch learned this lesson from his friend, and hero, Jon Snoddy at Disney Imagineering. It was Snoddy who told Pausch that if he waited long enough, people would surprise and impress him. From this Pausch realized that when one is angry and frustrated with someone else, it may be because that person hasn't been given enough time. Although it takes great patience and can take many years, Pausch reaffirms Snoddy's assertion that "In the end, people will show you their good side" and that if you "just keep waiting, it will come out."

3. Dream Big

"I was born in 1960. When you're eight or nine years old, and look at the TV set, and men are landing on the Moon, anything is possible. And that is something we should not lose sight of: Inspiration and the permission to dream is huge." -Randy Pausch, 2007

This lesson is perhaps the most simple, yet most rich of them all. Pausch urges all people to not only give themselves permission to dream, but to fuel their kids' dreams, too. This particular lesson really hit home with me because, as Daily Kos reflected, "we are all busy chasing down something important" and always reason that "there's always time to investigate this later." In the process of doing so, we "begin to overlook the important stuff as we chase the next buck, or the next big thing." I can totally relate to this statement in that I feel like I'm always worrying so much about what I have to do next, where I'm supposed to go, and what I should be doing to get there (in high school, it was college, in college it's grad school, and in grad school it's the job force) that I've lost sight of the things that I used to dream of doing and have stopped pausing to truly enjoy and experience the things I want to do for much longer than a mere second.

Randy Pausch is truly an inspirational individual. Despite being terminally ill with pancreatic cancer, Pausch has maintained a purely positive outlook on life and unselfishly shared it with the world not for the money, or for the fame, but for his kids, as he believed that by doing so he was putting himself in a bottle that would "one day wash up on the beach" for his children long after he has gone. His story not only touched my heart, but really reminded me to take a step back and remember what is really important in life and to live it to its fullest every day before it's too late.

Watch Pausch's Lecture Below (I urge everyone to take an hour out of their day to do so):

Saturday, April 5, 2008

Fight Night

Forget Mayweather v. Big Show, I'm more interested in the Mariah Carey and Elvis Presley throwdown.

This week Mariah Carey KO'd the King of Rock when she surpassed him in producing the most No. 1 singles on the Billboard Charts. This accomplishment comes courtesy of her 18th single Touch My Body, which is also No. 1 on the trade magazine's digital download chart. Carey now only takes a backseat to the Beatles, who sit at the top spot with 20 number 1 singles.

Although I'm not the biggest Mariah aficionado (or the biggest fan of the horrendously cheesy aforementioned song) I have to admit the girl can sing. For the past 18 years, her pipes have granted her the ability to turn out hit song after hit song, a feat which takes more than just good marketing strategy and luck. Presley fans are a little less likely to admit so, as they have gotten their white sparkly jumpsuits all knotted up in anger over his defeat.

And all over what?

So what if Mariah Carey has more #1 hit singles than Presley? Those numbers say nothing about the quality or lasting impact that each respective artist has had, or will continue to have, on musical culture. Just as KRTH-FM DJ Gary Bryan stated, "Mariah Carey is a terrific singer, and this is a great accomplishment, but you can't quantify someone's place in music history by chart statistics. Some people reflect their time and some people define their time. Mariah is a reflection of her time...Elvis on the other hand defined his time, much as the Beatles later did. Mariah doesn't have that kind of iconic stature." Los Angeles Times reporter Randy Lewis was able to effectively illustrate this point when he stated that there are no velvet Mariah Carey paintings, nor are there "couples racing to Vegas chapels to be wed by Mariah impersonators." Yes, it is true that Mariah Carey is a pop icon of our time, but she will hardly be able to transcend the times in the manner that Presley did simply because she is not the pioneer that he was.

Despite the Presley fan backlash, Carey has remained a humble and gracious winner. In fact, Carey touched on what Bryan and Lewis stated by saying that although she was happy and grateful, she could never really put herself "in the category of people who have not only revolutionized music but also changed the world" because that was "a completely different era and time."

And if Presley fans just can't get past the numbers, then they should chew on this. On researcher Joel Whitburn's list of Top 500 artists for albums and singles (which is determined on a sliding scale in which musicians are given points for each recording that reaches Number 1, 2, etc.) Elvis comes in first on both the Record Research chart and Album Ranking chart.

2 out of 3 Billboard titles for the King ain't shabby, especially since majority rules.

Rematch, anyone?

Saturday, March 29, 2008

T is for Tiananmen, O is for Olympics & C is for China...and Censorship

In the wake of recent social unrest among Tibetans and the rise in fear of protests, China, the host nation of the 2008 Summer Olympic Games, has recently informed broadcast officials that live television shots from Tiananmen Square will be banned during the Beijing Olympics. Tiananmen Square is often regarded as the "face of China" and is primarily known as being the site of a famous pro-democracy protest in which Chinese troops were called in and launched a deadly assault on the demonstrators.

China's recent decision will not only disrupt the plans of NBC and other major international networks that have paid hundreds of millions of dollars to broadcast the Games but could also potentially alienate the estimated half-million foreigners who will be attending. It has also been stated that these "heavy-handed measures" could potentially undermine Beijing's pledge to the International Olympic Committee that the Games would promote greater openness in the formerly isolated country.

Many activist groups have stated that they planned to use the Olympics to promote their causes for months; however, China is hell-bent on preventing this from occurring. Foreign activist groups are also looking to use the Olympics as a stage on which to get their voices heard. In fact, one specific group of foreign activists who are angry about China’s support for Sudan in the civil war in Darfur have recently stated that they would be demonstrating in Beijing during the Games.

Live broadcasts from Tiananmen Square were intended to showcase a friendly and confident China, a China that had put the deadly 1989 military assault in the past. However, with this measure and the events of late, China has clearly shown that it is anything but friendly, and far from confident.

As for the political future of China, I'm going to take Frankly My Dear's advice and not get too excited about Chinese politics becoming transparent or fair anytime soon.

I'll save my excitement for Women's Gymnastics.

Saturday, March 22, 2008

Why 5 Fingered Discounts are not the BEST idea...

Last week, upon hastily stealing a packet of meat from a Dutch supermarket, an unnamed sticky-fingered shoplifter forgot to leave the scene of the crime with one critical piece of evidence:

his 12-year-old son.

The 45-year-old thief dashed to his car faster than Asafa Powell, swatted away a supermarket worker who had flung himself on the vehicle's hood in an attempt to stop the escape, and left behind his own kid in the midst of the ruckus.

**Let's pause for a second and diverge from the main issue at hand and reflect on the actions of the supermarket employee. It's one thing to recognize when a crime has occurred and take the appropriate measures of calling in the local authorities to handle the case, but it's another thing to recklessly toss oneself onto the hood of an unknown criminal's car...for a slab of MEAT.

Not diamonds, not dollars, but MEAT.

Need I say more?**

Anyways, when officers contacted the thief through the boy, the thief not only refused to collect his son, but told the officers to get a hold of the boy's mother. Although the man later turned himself in on Thursday, I highly doubt that the stolen meat will be the biggest of his problems...

All I can say is that the steak or chops BETTER have been worth it.

Saturday, March 15, 2008

Do your pants hang low...do they sag, do they flow?

Surprisingly enough the bill's primary sponsor, Orlando Senator Gary Siplin, is a Democrat....yes, you heard me, a D-E-M-O-C-R-A-T. Siplin has justified his support of the measure on the grounds that this fashion statement has a not-so-glorious origin, as he alleges it was made popular by rap artists after first appearing among prison inmates as a signal they were looking for sex. (How he knows this for a fact, I dare not ask...especially with the recent Spitzertastic events of the past week...)

Notes on a Scandal

Face it. Eliot Spitzer is not the first politician, let alone man, to be involved in a sex scandal and he certainly won't be the last. Tales of infidelity have littered American politics as far back as the nation's founding and have continued to transpire throughout history. The beginning of the 19th century had Thomas Jefferson and Sally Hemings, the 1960's had John F. Kennedy and Marilyn Monroe, and the 1990's had Bill Clinton and Monica Lewinsky...

and Paula Jones...

and Gennifer Flowers.

And now, the beginning of the 21st century has Eliot Spitzer and Ashley "Kristen" Alexander Dupre.

Same scandalous tune, different politician, different year.

So if America has heard it all before, why all of the commotion?

Although Spitzer's scandal differs from those of the aforementioned politicians in that they did not explicitly pay for sex or sexual favors, or at least didn't do so as far as the public knows, this is hardly something Spitzer should be condemned for. In fact, in comparison to his fellow scandalites, Spitzer should be applauded for his decision to engage in extramarital relations with a prostitute rather than entertain a mistress.

Well, maybe not applauded, but certainly not branded with a scarlet letter for all of eternity.

While the recklessness of Spitzer's actions may suggest otherwise, the Princeton and Harvard Law alum is no dummy. In opting to carry on with a prostitute, Spitzer transformed an act of transgression into a transaction of business. In business, money or collateral is exchanged for goods and/or services. In Spitzer's case, money was exchanged for sexual services from Dupre. In essence, the arrangement was really very cut and dried and not nearly as complicated as affairs with mistresses tend to be since the x factor-the emotional connection-is missing. While it is true that employing a prostitute was against the law, if one were to isolate the legality of the circumstances from the situation, then it would be seen as nothing more than what it fundamentally was-just business. Whether or not Spitzer would still have his job had he chosen to follow in Clinton's footsteps remains unclear; however, I'd be willing to bet that even if he could save his job, he'd have an even less likely chance of saving his marriage.

With this said, it's not the nature of the act that Spitzer committed that has disturbed Americans, but rather the greater meaning and implications it has for American society. Americans are preoccupied with Spitzer's case not necessarily because they find it to be horrendously disgraceful and blasphemous but because it hits a little too close to home, as it challenges the true and untainted morality and virtue many Americans believe they possess. It's no secret that Spitzer, the "Sheriff of Wall Street," had built his reputation as a "paragon of virtue" by hunting down financiers and breaking up prostitution rings around New York City. Immediately following the scandal, critics branded Spitzer a hypocrite, a fraud, and a phony, often forgetting that it was not Spitzer who proclaimed himself to be cut from the most morally correct cloth, but rather the public who interpreted his actions and words to mean so. What Spitzer did (in office) was not who he was, and whether Americans are willing to admit it or not, this is greatly unsettling as we oft believe our actions to be a direct manifestation of our character.

Saturday, March 8, 2008

The "R" Word

Of course, it didn’t take the actual occurrence of the economist-drafted definition of a recession as two consecutive quarters of negative growth in the nation's gross domestic product for most Americans to recognize that the economy is on a one-stop train ride to Recessionville, as constant reminders of the economy’s growing fragility are everywhere. To many, the rise in mortgage defaults by "subprime" customers who were issued loans despite patchy credit histories during the last housing boom is sufficient evidence in itself, while to others the ever-increasing cost of gasoline, college, and health care raises warning flags, and to some the seemingly trivial rise in the price of a single tomato to $1.79 is enough to launch into a panicked frenzy.

Whatever the reasoning may be behind one’s belief that the R word’s presence in the United States is anything but a fantasy, it is clear that the issuance of Buffett’s statement not only served as a confirmation of sorts, but finally allowed the alarm bells that have been haphazardly muffled by President George W. Bush to ring loud and clear across the nation. Although Bush has repeatedly attempted to reassure Americans that the U.S. economy is not headed into a recession and is merely experiencing a slowdown in growth, the data has suggested otherwise. As bank after bank suffers with bad mortgages, multitudes of companies have continued to sharply chop profit forecasts in recognition that American consumers are too neck deep in debt to purchase the next new vehicle or plasma television. As this occurs, the value of the dollar continues to crash and burn, thus prompting businesses to cut investments and consumers to further seal up their already relatively tight pockets.

As American consumers become increasingly frugal, the global marketplace will inevitably begin to suffer. It has long been held that when the United States sneezes, the rest of the world catches a cold; however, with this particular outbreak of the R word, the world is positioned to catch more than just a few sniffles. Perhaps the best diagnosis of the United States’ economic troubles and the impact they will have on the global economy was issued by renowned economist and New York University professor Nouriel Roubini. Roubini stated that at this critical time “the US will not experience just a case of a mild common cold; it will rather suffer of a painful and protracted episode of pneumonia” thus resulting in a serious “real and financial contagion to the rest of the world.” While it is true that the United States accounts for only 5% of the world’s population, it still controls over a quarter (26%) of the global economy. Although some analysts have suggested decreasing dependence on American consumers in order to prevent the effects of the impending recession from spreading, to do so would not provide the miracle remedy international markets have been hoping for. Because the United States is not only a key direct trading partner, but a crucial indirect trading partner as well, countries that have little or no contact with US markets would still feel the stinging pinch of the downturn of the American economy as they heavily depend on countries that are directly dependent.

Now, unless some miracle vaccination that will immunize the nations that control the remaining 74% of the global economy from the United States’ economic troubles has been invented without my knowledge, I would suggest that they, too, take off their headphones, stop denying the existence of the alarms, and cooperatively strategize to overcome this tremendous problem before they, too, fall fatally ill because the chances are if it looks like a recession and sounds like a recession, it must be a...

Blame B.F.

I, like many others, remain extremely disgruntled about the pending loss of an hour of sleep that I will experience early tomorrow morning as Daylight Savings takes place. Over the past 20 years, I have conditioned myself to look forward to the addition of an extra hour of sleep in the fall, and have learned to loathe the fateful spring day when my precious hour will be lost, never giving much thought to the cause and reason behind the concept...until now.

Who was the culprit responsible for this cruel and unusual punishment?

What type of person would conjure up a concept that robs millions of people of an hour of their most valued resource?

How could anyone do such a terrible thing?

The who, surprisingly enough, turns out to be none other than Benjamin Franklin...yes, the Benjamin Franklin. Apparently

Although it has been affirmed that the primary purpose of changing our clocks during the summer months to move an hour of daylight from the morning to the evening is to make better use of daylight, Daylight Saving Time has garnered its fair share of critics.

While my reasons for disliking Daylight Savings are entirely selfish, others have questioned its purpose, arguing that its energy-saving reasoning is fundamentally flawed. These critics have argued that the energy allegedly saved by DST is offset by the energy used by the air conditioners and cooling devices that those living in warm climates use to cool their homes during hot summer afternoons and evenings. Other critics have asserted that more evening hours of light encourage people to run more errands and visit friends, thus resulting in the consumption of more gasoline.

Distaste for DST has also been expressed by people whose schedules are tied to sunrise, such as farmers, as it often takes animals a few weeks to adapt to the new schedule. Parents have expressed concern that early morning dangers are more abundant with the enactment of the Spring Daylight Saving Time, as children are less visible as they cross roads and wait for school buses in the darkness.

Now, it's impossible to say definitively that Franklin knew what long-term repercussions his ideas would have on the sleep cycles of generations of people, but I can't help but think that upon drafting An Economical Project at the ripe old age of 78 years, he couldn't have cared less, as he would soon be enjoying the deepest slumber of them all.

Saturday, March 1, 2008

Classically Crazy

Believe it or not, but this man did not escape a mental institution.

In fact, the only thing this man is crazy about is....

classical music?

At 26 years old, Venezuelan native Gustavo Dudamel is the reigning king of classical. There is no doubt that he is an unlikely contender, as it seems that he has barely given up bottle-feeding; however, his impressive skill and natural ability put any speculation to rest.

Born in

Dudamel's big break came in 2004 when he was declared the winner of a major conducting competition. A mere two years later in 2006, at 25 years old, he was offered

Now, I don't know much about classical music, let alone conducting, but as Eben Harrell asked in Time magazine: What makes Dudamel so special?

His mop of wild curls?

His rock star looks?

His relatively young age?

According to Ed Smith, the managing director of the Gothenburg Symphony Orchestra, what makes Dudamel so unique is his ability to conduct in two directions by communicating with musicians and the audience at once. One of Dudamel's mentors, Simon Rattle, also acknowledged Dudamel’s extraordinary ability, calling him "the most astonishingly gifted conductor I have ever come across." Daniel Barenboim and Claudio Abbado, two other giants of European conducting, showered similar praises on Dudamel, further evidencing that the hype surrounding this young prodigy is not based on fiction, but fact.

Now call me crazy, but after taking a listen to a few pieces, I, too, have found myself a bit smitten.

Sunday, February 24, 2008

Vanishing Act

Because such thinking was communicated in an unembellished and straightforward manner and was additionally highly accessible to the general public, public intellectuals garnered a large audience and continued to prosper well into the 20th century. As the golden years of the 1950’s came to a close, an alarming change occurred with the ushering in of the 1960’s and 1970’s. Consensus between individuals dissolved, “proliferating theoretical schemata” occurred, and the guiding light of one’s elders was no longer followed. It was at this critical time, Jacoby contended, that “smart young people decided not to write well” and began to employ jargon in their conversations and publications. As these public intellectuals built their careers, they began to solely seek tenure and talked only to one another while “construing their own texts as radically democratic in spirit and subversive of the established order.” As this shift took place, Jacoby avers that something disappeared from American discourse and the “give-and-take” of serious discussion was damaged. Although ideas still circulated, they only did so in narrow channels, fostering an unhealthy environment in which the public's critical intelligence was enfeebled. Public intellectuals retreated and essentially became inbred, thus robbing the general public of the ability to interact, converse, and learn from them.

The validity of Jacoby’s tale can be affirmed by simply taking a closer look at modern day American society. The concept of a public intellectual is not one that is familiar to many who do not bear the title, evidence of its fleeting significance and import. Before public intellectuals can effectively reestablish themselves in the minds of the public, a solid foundation on which an understanding of the public intellectual will be constructed must be established. By answering the basic fundamental questions of "who?" and "what?" the framework for such a foundation can be laid. Who is a public intellectual? Who are public intellectuals talking to? What is the role of the public intellectual in the greater world? What must a public intellectual do to fulfill this role?

Defining who qualifies as a public intellectual is a daunting task, as the question is highly subjective and produces an infinite number of answers, each of which is legitimate in its own right. Although I personally believe that an intellectual is anyone who considers himself or herself to have intelligence on any issue which he or she happens to take interest in, it appears that a large number of individuals (many self-perceived public intellectuals themselves) have put intellectuals on a higher pedestal by severely narrowing & tailoring the definition in a seemingly elitist measure made to separate the sophisticated intellectuals from the “rest” of apparently unintelligent society.

Deciding what makes a public intellectual public is yet another difficult question to address. Through the use of deductive reasoning, I have come up with my own definition of public.

“Public” implies that something is not “private.” In order to make something not private, it must be seen by an individual other than the original producer or author of thought. Keeping these two fundamental truths in mind, I found it safe to assume that an intellectual officially becomes public when he or she shares his or her thoughts, ideas, or works with any person or groups of persons other than him or herself.

Although the debate concerning the definition of the term “the public intellectual” could go on for all eternity, I have chosen to forgo my own personal definition and apply Ralph Waldo Emerson’s widely accepted characterization of a public intellectual to Jacoby’s tragic tale in hopes of arriving at a possible explanation for why the disappearance has occurred, and is still occurring, in America.

In the great essay “The American Scholar” Emerson pondered the meaning and purpose of the public intellectual before concluding that a public intellectual is an individual who recognizes the importance of the past, yet is able to free his thoughts from the box which history creates. In doing this, Emerson's intellectual is not only able to “preserve great thoughts of the past” but communicate them and utilize them as a springboard for new ideas. As these new thoughts are formulated, Emerson’s public intellectual not only shares them with fellow intellectuals, but communicates them to the world out of an obligation to oneself. The presentation of a public intellectual's material to the public is essential, as Emerson contends that an intellectual “can be relatively ineffective on a widespread scale regardless of the level of their expertise or knowledge.”

Taking Emerson’s definition into consideration in the context of Jacoby’s story, it can be concluded that both the public intellectual and the general public are mutually responsible for the public intellectual's alleged disappearance. As Jacoby stated, following the 1950’s people began turning deaf ears to the works of public intellectuals, thus prompting public intellectuals to seek seclusion and only share the fruits of their knowledge and work with one another. In doing so, the public intellectual became less “public” and, in essence, became more of a “private intellectual.” Therefore, the public intellectual did not necessarily disappear from society altogether, but rather transformed into something else completely.

So who is to blame for starting the vicious cycle of the public not listening and the public intellectual subsequently transforming and disappearing?

In the essay entitled “Wicked Paradox: The Cleric as Public Intellectual,” Stephen Mack, a professor and scholar hailing from the University of Southern California, stated that “The measure of public intellectual work is not whether the people are listening, but whether they’re hearing things worth talking about.” Upon analyzing this statement I was able to arrive at an answer to the aforementioned question: the demise of the public intellectual was instigated not by the public, but by public intellectuals themselves. Because public intellectuals allegedly possess knowledge superior to that of non-public intellectuals, it is safe to assume that part of this knowledge would entail recognizing the greater ultimate significance of one's work, even if no one else recognizes it or appears to care about it at a particular moment in time. This has not been the case, as the egos of public intellectuals became bruised when people appeared to stop caring, prompting them to draw back from mainstream society and consequently starting the vicious cycle of their demise.

Until public intellectuals can stop licking their wounded egos and re-expose themselves and their works to the public, their relative extinction is inevitable. And self-extinction, well, that's not so intelligent.

Saturday, February 23, 2008

Dic-tator Tots

Why were these four men fussing over this particular edition?

Enclosed in the issue was the magazine's annual top 10 list of the worst dictators of 2008, of course!

And just what could they have possibly done to warrant the acquisition of this coveted title?

Blatantly abuse their power? Check!

Suspend elections and essentially erase the civil liberties of their people? Double Check!

Routinely torture and jail citizens? No che-Who am I kidding? CHECK!!!

In addition to sharing the aforementioned characteristics, Jintao, Afewerki, Jong-il, and Khamenei are alike in yet another way: they all have discovered the most powerful weapon of them all.

Nuclear bombs? Nope.

Biological warfare? Try again.

Censorship? Ding! Ding! Ding!

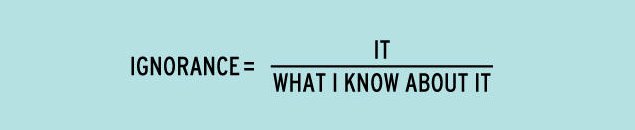

Throughout history censorship has been used to control the hearts, minds and actions of citizens, and such practices have continued well into the 21st century. Censorship, as illustrated below, is a common feature of dictatorships and other authoritarian political systems. Unlike in the United States and other Westernized countries, freedom of speech is not regarded in these systems as a fundamental right or liberty of the people. Jintao, Afewerki, Jong-il, and Khamenei have all heavily employed censorship as one of the primary methods to assert and maintain control of their people.

Let's take Kim Jong-il of North Korea for example. Coming in as Parade's #1 worst dictator of 2008, he has successfully managed to run the most isolated and repressive regime in the world. His ability to do so is largely due to the inability of North Korean citizens to access information other than what is provided by the government. Because the nation essentially operates on propaganda, Jong-il maintains an extremely tight control of expression. In fact, his control of expression is so tight that Hu Jintao's China seems free in comparison.

Although Jintao's administration pales in comparison to Jong-il's regime in terms of censorship, Jintao is still no saint. With 42 journalists in jail, constant policing, and deletion of political speech, censorship in China has never been greater. Last year, Jintao not only increased censorship, but cracked down on human-rights activists, forced abortions, limited the practice of religion, and asserted control over all media.

Across Asia in Iran, Sayyid Ali Khamenei and his council adopted increasingly repressive measures in an effort to maintain control of Iranian citizens. In 2007, officials not only persecuted dissidents and shut down music studios and cafes, but stoned a man to death for adultery and carried out public hangings.

South of Iran in Africa, Isayas Afewerki implemented a ban on privately owned media, thus making

came shortly after the press covered a split in the ruling party, as it provided a forum for debate on Afewerki’s rule.

The complete list of 2008's top 10 dictators is definitely worth taking a look at. Perhaps you'll see some familiar names, or a few familiar faces. If your favorite dictator didn't make the cut, check the runners-up, and if he or she is still nowhere to be found....

there's always next year.

Tuesday, February 12, 2008

Need a buck? Steal a painting.

Last Sunday, in what has been dubbed "one of Europe's greatest art heists", masked robbers brandishing handguns stole four paintings by Cezanne, Degas, van Gogh and Monet from the private Buehrle Collection at a museum in Zurich, Switzerland. The paintings, “Poppies near Vetheuil” by Monet, “Count Lepic and His Daughters” by Degas, “Blossoming Chestnut Branches” by van Gogh and “The Boy in the Red Vest” by Cezanne, were worth an estimated $164 million and were stolen by three men shortly before the museum closed on Sunday afternoon. While one of the robbers forced visitors and employees to lie on the ground at gunpoint, his two accomplices nabbed the 19th century treasures. They proceeded to flee the scene in a white van with a painting possibly sticking out of the back of the vehicle.

Surprising, yes. Tragic, no.

As passive as it sounds, I haven't the slightest bit of sympathy for the museum and its directors. I mean come on, the thieves walked in through the main entrance, at 4:30 p.m., on a Sunday afternoon and only had to use a mere handgun as leverage to nab the paintings. Now, I’m not suggesting that anyone should have taken a bullet for the artwork; that would have just been absurd. I am suggesting that perhaps this gallery (and others like it) amp up their security by investing in a little thing called a metal detector, tossing in a few more cameras, and beefing up their squad. Given the lack of security that was displayed, it's a wonder the museum directors didn't simply leave the paintings on the sidewalk and allow passersby to take them on a first-come, first-served basis.

In a news conference following the robbery, the “devastated” museum director Lukas Gloor said that the paintings were displayed behind glass panels and that an alarm was triggered as soon as they were touched. Congratulations, an alarm was present. Two gold stars and a pat on the back for Mr. Gloor.

What use is an alarm if it does nothing to aid in the protection of the paintings?

Maybe it's just me, but I would presume that upon hearing of a fellow gallery being robbed, other galleries and museums would take caution and amp up the security in their own buildings, but then again, perhaps Mr. Gloor missed the memo.

I’m off to MOCA…I spotted a piece that might go for a pretty penny on the black market.

Thursday, February 7, 2008

Mass Media Monarchy

One of the most notable instances in which the marketplace demonstrated a firm control of the creative freedom of mass media occurred in the music industry at the end of the 20th century with the reemergence of boy bands. The concept of a boy band was nothing new, as it had roots dating back as early as the 1960’s, when the Monkees and the Beatles ruled the industry. With each successive decade came a new, refurbished version of the boy band: the 1970’s had Menudo, the 1980’s had New Kids on the Block and New Edition, and the 1990’s had the Backstreet Boys, N*Sync, and 98 Degrees, amongst many others. These bands incited a craze that not only dominated the airwaves, but filled the newsstands and adorned the bedroom walls of millions of teeny boppers around the world. Although each respective band of the late 90’s had its own distinct name and projected image, all were derived from what was more or less the same general formula. The groups typically featured several young male singers in their mid to late teens or early twenties who, in addition to singing, danced to highly choreographed numbers. Ironically, musical instruments were rarely played by members, thus making them more of a vocal group than a band. Backstreet Boys, O-Town and other bands of this era were not spontaneously created, but were methodically contrived by talent managers and producers (such as the notorious Lou Pearlman of Trans Continental Records) who, upon realizing the potential to capitalize on a new generation of consumers, selected group members based on appearance, singing ability, and dancing skills. These boy bands were also classically characterized by changing their appearances to adapt to new fashion fads (they often donned flashy matching ensembles), following mainstream music trends, performing complex dances, and producing elaborate shows.

As important as the selection of the members of a boy band was, this alone did not determine the commercial success of the group. Record companies, aware of consumers' desire to “connect” with the group, capitalized on this by highlighting a distinguishing characteristic of each member and portraying him as having a certain image. By branding members with particular personality stereotypes such as “the shy one” or “the rebel,” record companies allowed individuals to identify with a member or multiple members of a group on a more personal level and were able to strategically reel consumers in. A perfect example of this occurred with the group N*Sync. With blond curly hair, piercing blue eyes, and a sweet high-pitched voice, Justin Timberlake, the youngest of the group, was branded as “the baby” of the group. Timberlake subsequently attracted a large fan base of very young girls who identified with this stereotyped image, whether because Timberlake was the closest to their own age or simply because they bought into his projected sweet persona. Chris Kirkpatrick, another member of N*Sync, was Timberlake’s image foil. Ten years his senior, Kirkpatrick sported facial hair, an unusual dreadlocked hairdo, and large hoop earrings; he was often dubbed “the crazy one” and thus attracted a slightly older fan base of girls who could identify with this particular persona.

The heavy reliance of record companies upon the aforementioned stereotypes left little, if any, creative freedom to groups and rendered members essentially unable to venture outside of the box created for them. The key for boy bands was to remain trendy, and therefore the band was expected to conform not only to the most recent fashion trends, but to the musical trends of the popular music scene. Because the majority of the music was written and produced by producers who worked with the bands at all times, they were the ones who not only projected the next smash hit, but controlled the group’s sound in a manner in which this would be possible. As the marketing and packaging of boy bands and their image began taking precedence over the quality of the music they were producing, the options which artists and consumers alike were offered became narrower and narrower. Rather than take the chance of venturing into uncharted musical waters by trying something new, producers and record labels became content selling the same sort of tunes to the same audience, as that is what was generating maximum profit. In doing so, they created a vicious cycle. Once mass media businesses recognized what types of songs were selling, boy bands were limited to singing and performing the same feigned emotional ballads and pop dance tunes to slightly altered beats, further limiting the musical options available to the public.

Although boy bands were able to make an easy transition into the early 21st century, the commercial success of the “pop” boy bands did not last long. The fan base for these groups grew up, their musical tastes evolved, and a new generation of consumers was established. As this evolution occurred, boy bands did not simply disappear, but once more reemerged with an all-new image and sound. Gone were the days of gel-tipped hair and cheesy color-coded outfits, and in were the days of vintage duds, faux hawks, and eyeliner. The industry waved goodbye to LFO, O-Town, and LMNT, and readily embraced groups such as My Chemical Romance, Sum 41, and Good Charlotte, perpetuating the same old industry song of capitalization, but with a different, punkier image and edgier tune.

With this rebirth of punk in the boy band scene came the commercialization of yet another movement: indie. Up until a decade ago indie (short for independent) traditionally referred to any form of art (music, film, or literature) that was created without corporate financing and without mainstream influence. In the music industry, “indie” specifically referred to any music that had been produced and funded by any band or label that was not affiliated with major corporate labels such as Sony or Epic. Free from corporate control, indie artists and groups were not under the same profit-based shareholder pressure most record corporations placed on their artists to drive sales, and were consequently freer to release music that did not necessarily have the greatest commercial appeal.

Indie’s independence from corporate control did not last long, as companies began to catch on to the great commercial potential of this genre. Some have attributed the adoption of indie by corporations to the wide success of garage bands such as Nirvana, Pearl Jam, and

Once corporations began to show interest in the indie scene, many smaller music labels similarly grew eager for wider financial success, and began adopting “business practices of major labels once considered anathema in the scene” such as licensing songs to advertising companies and hiring PR firms and street teams to market their records. As this selling out occurred, the definition of indie as a culture in which the truly independent passion for music can be expressed dissolved, and indie became no more than a money-driven branding tool aimed at marketing a particular image. Major labels have continued to use the indie name to attract, and profit off of, a “different” demographic looking for a “different” type of music when, in all actuality, indie music is mainstream. The true essence of the genre has been lost, and all the consumer is receiving is more unoriginal music, once more exemplifying the narrowing of creative listening options available to the consumer.

The deterioration of creativity and creative freedom in mass media due to commercialization is not exclusive to music, as it has had an equally heavy impact on the television and film industries. As the era of pop boy bands came to a close in music, a reality television revolution had begun to emerge. Reality TV, a “genre of television programming which presents purportedly unscripted dramatic or humorous situations, documents actual events, and features ordinary people instead of professional actors”, varies greatly as it encompasses a plethora of subgenres. The most popular subgenres have included Celebreality, in which a celebrity is documented going about his or her daily life (The Osbournes, Newlyweds, The Simple Life), talent search shows in which the “next big thing” is to be discovered (American Idol, Pop Stars, America’s Got Talent), self-improvement shows (Extreme Makeover, Queer Eye For the Straight Guy), and job search shows in which pre-screened competitors perform a variety of tasks based on a common skill and are judged by a panel of experts as a process of elimination narrows the playing field until a winner is declared (Project Runway, The Apprentice, The Shot).

Once the success of the first modern day reality television shows was observed by mass media businesses, networks wasted no time in rearranging their show lineups to make room for shows of their own, as each network was eager to cash in on a piece of the reality pie. As sitcoms and conventional drama series were pushed aside and more and more reality shows went into development, the quality and the variety of the programs which the general public was offered began to decline. Viewers were once more caught in a Catch-22 of sorts; they kept tuning in to reality programs because that was all that was offered by networks, and networks kept offering them because that is what drew ratings and revenue. And so the cycle continues.

The plaguing of music and television by mass media business strategy has also begun to plague the film industry, albeit at a slower pace. The cinema classics of the past greatly differ from modern day feature films, as the pursuit of profit has gradually taken precedence over quality and construction. Actors, producers, and critics such as Oscar-winning Sir Michael Caine have attributed the deterioration of films to the general “lacking in dialogue, character, and plot”. Over the years film, like the music and television industries, has become a product of corporate interests aimed at generating the maximum amount of money possible, and has thus fallen subject to the same formulaic methods of production that were previously noted in the music industry. As the emphasis of production has shifted away from cohesiveness and fluidity, special effects, action, violence, and sex have become the determining factors in the success of a picture. Obvious patterns in the movies have emerged as studios note one film’s success and continue to emulate it in a slew of succeeding movies. Some notable patterns include the “teen queen” movies of the late 90’s (She’s All That, 10 Things I Hate About You, Clueless, Never Been Kissed, American Pie, Can’t Hardly Wait), superhero movies (X-Men Series, Spiderman Series, The Punisher, Hulk), “spoof movies” (Scary Movie Series, Date Movie, Epic Movie) and fantasy movies (Harry Potter Series, The Lord of the Rings Series, Chronicles of Narnia Series, Eragon, The Golden Compass, Pan’s Labyrinth). With the scrapping of the responsibility “to give audiences something better” the film industry has grown content churning out the same sorts of hits with the same basic plot lines to gain profit. In doing so, creativity is once more stifled, and the options of films which consumers are offered are significantly lessened.

Alberge, Dalya. "Caine hits out against today's 'banal' films." The Times Online. Sept. 2006.

Andrews, Catherine. "If it's cool, creative and different, it's indie." CNN.com. Oct. 2006.

"Boy band." Wikipedia, The Free Encyclopedia. 11 Feb 2008, 02:22 UTC. Wikimedia Foundation, Inc. 11 Feb 2008 <http://en.wikipedia.org/w/index.php?title=Boy_band&oldid=190525878>.

"Independent music." Wikipedia, The Free Encyclopedia. 10 Feb 2008, 13:17 UTC. Wikimedia Foundation, Inc. 11 Feb 2008 <http://en.wikipedia.org/w/index.php?title=Independent_music&oldid=190380470>.

Kaufman, Gil. "The New Boy Bands." MTV.com. Date Unknown.

Lamb, Bill. "Top 10 Boy Bands." About.com. Date Unknown.

Olsen, Eric. "Once again, boy bands say 'bye, bye, bye'." MSNBC. March, 2004.

"Reality television." Wikipedia, The Free Encyclopedia. 5 Feb 2008, 16:46 UTC. Wikimedia Foundation, Inc. 11 Feb 2008 <http://en.wikipedia.org/w/index.php?title=Reality_television&oldid=189285855>.

Rowen, Beth. "History of Reality TV." Infoplease.com. July, 2001.

Tuesday, February 5, 2008

Sheik of Chic Relinquishes Throne

Who is Valentino Clemente Ludovico Garavani? Here’s a hint: he’s not a Renaissance artist, nor is he an Italian footballer.

To many, Valentino Clemente Ludovico Garavani’s first name is all that is needed to recognize one of the most influential designers of the late 20th century, if not the most influential. Famous for his signature bold red dresses and classic couture, Valentino has dressed the world’s elite, including royalty, first ladies, and movie stars such as Nancy Reagan, Jacqueline Kennedy Onassis, Audrey Hepburn, and Elizabeth Taylor, amongst others.

His resume is just as impressive as his clientele. At 17, he moved to Paris where he studied at the Fine Arts School and at the Chambre Syndicale de la Couture Parisienne, and by 29 he had served as an apprentice at Jean Desses and Guy Laroche. It was that very year, in 1959, that Valentino finally set up his own fashion house on the prestigious via Condotti in Rome. A mere three years later Valentino made his big breakthrough in Florence, and with the help of trusted friend and former lover Giancarlo Giammetti, an empire was built.

45 years and innumerable collections later, on January 23rd of this year, Valentino decided to bid adieu to the fashion industry forever. While it is true that at 75 Valentino is long overdue for retirement, neither old age nor bad health was the primary factor driving his decision. So who, or what, was the culprit that spurred one of the greatest Italian couturiers to leave the art he had devoted his whole life to interpreting and mastering?

One might be surprised to learn that it was nothing other than big business.

At his final runway show at which his appropriately named “swan song” collection was

"The world of fashion has now been ruined…I became rather bored of continuing in a world which doesn't say anything to me. There is little creativity and too much business."

To some, Valentino’s words may appear to be somewhat hypocritical, as he made his fortune building a business of selling his high-end wares to the public. While this may be true, I believe Valentino was justified in making such a statement, as the evidence for his claim can be seen everywhere, from Nordstrom’s to Bloomingdale’s, 5th Avenue to Rodeo Drive. As the desire of companies and investors to generate a profit continues to eclipse the fundamental purpose for which “old generation” designers such as Valentino, Karl Lagerfeld, and Giorgio Armani began mastering their craft, little room is left for creative impulses to be expressed. As this occurs, the emphasis simultaneously switches from the designer and his or her vision to the consumers and what they demand. With the shift comes a new wave of designers who recognize it and subsequently begin designing clothes that all look the same, as they recognize that more of the same sells while the innovation of the new only has the possibility of selling. While Valentino was lucky to have successfully established his stylistic direction and to have unwaveringly stuck to his mantra of “keeping a woman looking her best” as each decade introduced its own distinct fads, his successors will be met with many more hurdles, as our society leaves little room for up-and-comers to recreate the old era “before fashion became a global, highly commercial industry” in this new consumer-driven era.

As firms such as Permira, the British private equity firm that purchased Valentino’s couture house, begin replacing the Valentinos with the Alessandra Facchinettis (a former Gucci designer who is considered to “be better suited to lead the group’s expansion into new markets and product lines”) of the world, I can’t help but feel an immense amount of remorse, as something great and inimitable has been forever lost.